
Publications

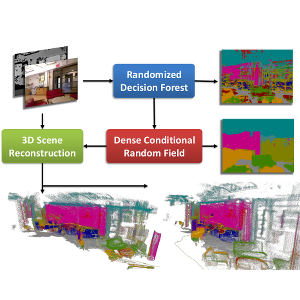

Dense 3D Semantic Mapping of Indoor Scenes from RGB-D Images

Dense semantic segmentation of 3D point clouds is a challenging task. Many approaches deal with 2D semantic segmentation and can obtain impressive results. With the availability of cheap RGB-D sensors, the field of indoor semantic segmentation has seen a lot of progress. Still, it remains unclear how best to approach 3D semantic segmentation. We propose a novel 2D-3D label transfer based on Bayesian updates and dense pairwise 3D Conditional Random Fields. This approach allows us to use 2D semantic segmentations to create a consistent 3D semantic reconstruction of indoor scenes. To this end, we also propose a fast 2D semantic segmentation approach based on Randomized Decision Forests. Furthermore, we show that it is not necessary to obtain a semantic segmentation for every frame in a sequence in order to create accurate semantic 3D reconstructions. We evaluate our approach on both NYU Depth datasets and show that we can obtain a significant speed-up compared to other methods.

@inproceedings{Hermans14ICRA,
  author = {Alexander Hermans and Georgios Floros and Bastian Leibe},
  title = {{Dense 3D Semantic Mapping of Indoor Scenes from RGB-D Images}},
  booktitle = {International Conference on Robotics and Automation},
  year = {2014}
}
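
The Bayesian 2D-3D label transfer mentioned in the abstract above can be illustrated with a generic recursive Bayes update. This is only a minimal sketch, not the paper's implementation: it assumes each 3D point keeps a class distribution that is multiplied by the per-pixel class probabilities of every frame in which the point is observed, then renormalized. The function name `bayesian_label_update`, the three-class setup, and the probability values are hypothetical.

```python
import numpy as np

def bayesian_label_update(prior, likelihood, eps=1e-12):
    """Fuse a class prior with one new observation (generic Bayes rule).

    prior, likelihood: arrays whose last axis indexes the classes.
    Returns the normalized posterior with the same shape.
    """
    posterior = np.asarray(prior, dtype=float) * np.asarray(likelihood, dtype=float)
    total = posterior.sum(axis=-1, keepdims=True)
    # Guard against division by zero when all products vanish.
    return posterior / np.maximum(total, eps)

# Hypothetical example: one 3D point, three classes, uniform initial belief.
belief = np.full(3, 1.0 / 3.0)
# Assumed 2D classifier outputs for the pixel that the point projects to
# in two different frames.
for frame_probs in ([0.6, 0.3, 0.1], [0.7, 0.2, 0.1]):
    belief = bayesian_label_update(belief, frame_probs)

print(belief)
```

Because the update is incremental, frames without a 2D segmentation can simply be skipped, which is in the spirit of the abstract's remark that not every frame needs to be segmented.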

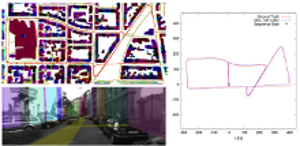

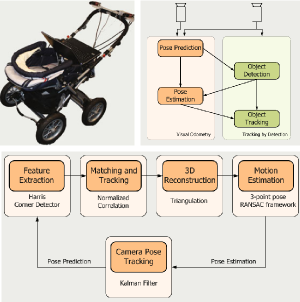

OpenStreetSLAM: Global Vehicle Localization Using OpenStreetMaps

In this paper we propose an approach for global vehicle localization that combines visual odometry with map information from OpenStreetMaps to provide robust and accurate estimates for the vehicle’s position. The main contribution of this work comes from the incorporation of the map data as an additional cue into the observation model of a Monte Carlo Localization framework. The resulting approach is able to compensate for the drift that visual odometry accumulates over time, significantly improving localization quality. As our results indicate, the proposed approach outperforms current state-of-the-art visual odometry approaches, while also indicating the potential that map data can bring to the global localization task.
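
To make the idea of a map cue in the observation model concrete, the following is a minimal sketch under stated assumptions, not the paper's actual model. It assumes 2D particles, a road network given as straight line segments, and a Gaussian weighting of each particle by its distance to the nearest segment. The names `point_to_segment_distance` and `map_weights` and the parameter `sigma` are hypothetical.

```python
import numpy as np

def point_to_segment_distance(points, a, b):
    """Euclidean distance from each row of `points` (N, 2) to segment a-b."""
    ab = b - a
    # Projection parameter of each point onto the segment, clamped to [0, 1].
    t = np.clip((points - a) @ ab / (ab @ ab), 0.0, 1.0)
    closest = a + t[:, None] * ab
    return np.linalg.norm(points - closest, axis=1)

def map_weights(particles, segments, sigma=2.0):
    """Weight particles by proximity to the nearest road segment (assumed model)."""
    dists = np.min(
        [point_to_segment_distance(particles, a, b) for a, b in segments], axis=0
    )
    weights = np.exp(-0.5 * (dists / sigma) ** 2)
    # Normalization assumes at least one particle has non-negligible weight.
    return weights / weights.sum()

# Hypothetical scene: one east-west road and two pose hypotheses.
road = [(np.array([-5.0, 0.0]), np.array([5.0, 0.0]))]
particles = np.array([[0.0, 0.0],    # on the road
                      [0.0, 10.0]])  # 10 m off the road
weights = map_weights(particles, road)
print(weights)
```

In a full particle filter these weights would be combined with the odometry-based motion update and followed by resampling, so hypotheses that drift away from the road network lose influence over time.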

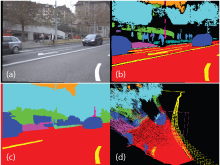

Joint 2D-3D Temporally Consistent Semantic Segmentation of Street Scenes

In this paper we propose a novel Conditional Random Field (CRF) formulation for the semantic scene labeling problem which is able to enforce temporal consistency between consecutive video frames and take advantage of the 3D scene geometry to improve segmentation quality. The main contribution of this work lies in the novel use of a 3D scene reconstruction as a means to temporally couple the individual image segmentations, allowing information flow from 3D geometry to the 2D image space. As our results show, the proposed framework outperforms state-of-the-art methods and opens a new perspective towards a tighter interplay of 2D and 3D information in the scene understanding problem.

Multi-Class Image Labeling with Top-Down Segmentation and Generalized Robust P^N Potentials

We propose a novel formulation for the scene labeling problem which is able to combine object detections with pixel-level information in a Conditional Random Field (CRF) framework. Since object detection and multi-class image labeling are mutually informative problems, pixel-wise segmentation can benefit from powerful object detectors and vice versa. The main contribution of the current work lies in the incorporation of top-down object segmentations as generalized robust P^N potentials into the CRF formulation. These potentials present a principled manner to convey soft object segmentations into a unified energy minimization framework, enabling joint optimization and thus mutual benefit for both problems. As our results show, the proposed approach outperforms the state-of-the-art methods on the categories for which object detections are available. Quantitative and qualitative experiments show the effectiveness of the proposed method.

@inproceedings{DBLP:conf/bmvc/FlorosRL11,
  author = {Georgios Floros and Konstantinos Rematas and Bastian Leibe},
  title = {Multi-Class Image Labeling with Top-Down Segmentation and Generalized Robust {\textdollar}P{\^{}}N{\textdollar} Potentials},
  booktitle = {British Machine Vision Conference, {BMVC} 2011, Dundee, UK, August 29 - September 2, 2011. Proceedings},
  pages = {1--11},
  year = {2011},
  crossref = {DBLP:conf/bmvc/2011},
  url = {http://dx.doi.org/10.5244/C.25.79},
  doi = {10.5244/C.25.79},
  timestamp = {Wed, 24 Apr 2013 17:19:07 +0200},
  biburl = {http://dblp.uni-trier.de/rec/bib/conf/bmvc/FlorosRL11},
  bibsource = {dblp computer science bibliography, http://dblp.org}
}

Real Time Vision Based Multi-person Tracking for Mobile Robotics and Intelligent Vehicles

In this paper, we present a real-time vision-based multi-person tracking system working in crowded urban environments. Our approach combines stereo visual odometry estimation, HOG pedestrian detection, and multi-hypothesis tracking-by-detection into a robust tracking framework that runs on a single laptop with a CUDA-enabled graphics card. By shifting the expensive computations to the GPU and making extensive use of scene geometry constraints, we could build a mobile system that runs at 10 Hz. We experimentally demonstrate on several challenging sequences that our approach achieves competitive tracking performance.

@inproceedings{DBLP:conf/icira/MitzelFSZL11,
  author = {Dennis Mitzel and Georgios Floros and Patrick Sudowe and Benito van der Zander and Bastian Leibe},
  title = {Real Time Vision Based Multi-person Tracking for Mobile Robotics and Intelligent Vehicles},
  booktitle = {Intelligent Robotics and Applications - 4th International Conference, {ICIRA} 2011, Aachen, Germany, December 6-8, 2011, Proceedings, Part {II}},
  pages = {105--115},
  year = {2011},
  crossref = {DBLP:conf/icira/2011-2},
  url = {http://dx.doi.org/10.1007/978-3-642-25489-5_11},
  doi = {10.1007/978-3-642-25489-5_11},
  timestamp = {Fri, 02 Dec 2011 12:36:17 +0100},
  biburl = {http://dblp.uni-trier.de/rec/bib/conf/icira/MitzelFSZL11},
  bibsource = {dblp computer science bibliography, http://dblp.org}
}