Pose Occlusion Dataset

Dataset

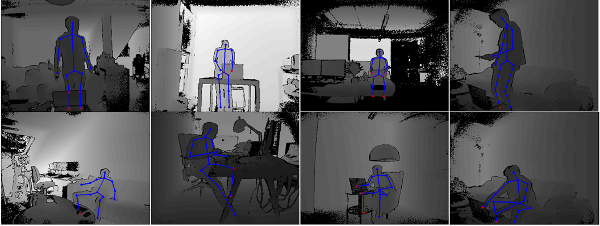

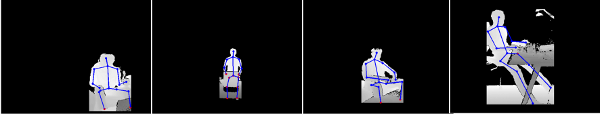

The dataset consists of 1000 depth images of partially occluded people. The dataset is recorded using the Kinect 2 sensor in different indoor environments, offices and living rooms, and consists of seven different subjects in different sitting and standing poses from different viewpoints. The dataset is further split into test and validation set with 800 and 200 images, respectively. Each image in the dataset consists of a single person partially occluded by objects like table, laptop and monitor. Each image is annotated with 2D ground-truth positions of 15 body joints and their visibility. Noisy Foreground mask for each image is also provided. Example images and foreground masks from the data set are shown below. The occluded joints are shown in red.

Example Images from dataset.

Example Foreground masks from dataset.
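As a minimal sketch of what each image's labels contain, the Python snippet below models one annotation as described above: 15 2D joint positions, each with a visibility flag, plus a helper that applies the noisy foreground mask to a depth image. The class `PoseAnnotation`, the function `apply_foreground_mask`, and the representation of images as nested lists are hypothetical illustrations. The sketch makes no assumption about how the dataset files are actually stored, and it treats any non-zero mask value as foreground.

```python
from dataclasses import dataclass
from typing import List, Tuple

NUM_JOINTS = 15  # number of annotated body joints per image (from the dataset description)


@dataclass
class PoseAnnotation:
    """Hypothetical in-memory form of one image's annotation.

    Only models what the description states: 15 2D joint positions, each with
    a visibility flag. The on-disk format of the dataset is NOT assumed here.
    """
    joints: List[Tuple[float, float]]  # (x, y) pixel coordinates, one per joint
    visible: List[bool]                # False means the joint is occluded

    def __post_init__(self) -> None:
        if len(self.joints) != NUM_JOINTS or len(self.visible) != NUM_JOINTS:
            raise ValueError(f"expected {NUM_JOINTS} joints and visibility flags")

    def occluded_joints(self) -> List[int]:
        """Indices of the joints marked as occluded."""
        return [i for i, vis in enumerate(self.visible) if not vis]

    def occlusion_ratio(self) -> float:
        """Fraction of the 15 joints that are occluded."""
        return len(self.occluded_joints()) / NUM_JOINTS


def apply_foreground_mask(depth: List[List[float]], mask: List[List[int]],
                          background: float = 0.0) -> List[List[float]]:
    """Keep depth values where the mask is non-zero; set all other pixels to `background`.

    Assumes the mask is a binary image with the same size as the depth image.
    """
    if len(depth) != len(mask) or any(len(d) != len(m) for d, m in zip(depth, mask)):
        raise ValueError("depth image and mask must have the same size")
    return [[d if m else background for d, m in zip(drow, mrow)]
            for drow, mrow in zip(depth, mask)]


if __name__ == "__main__":
    # Synthetic example: joints 3, 4 and 9 are occluded.
    ann = PoseAnnotation(joints=[(10.0 * i, 20.0) for i in range(NUM_JOINTS)],
                         visible=[i not in (3, 4, 9) for i in range(NUM_JOINTS)])
    print(ann.occluded_joints())  # [3, 4, 9]
    print(ann.occlusion_ratio())  # 0.2
    print(apply_foreground_mask([[1000.0, 1200.0], [900.0, 950.0]], [[1, 0], [1, 1]]))
    # [[1000.0, 0.0], [900.0, 950.0]]
```

Because the masks are noisy, the masked depth image may still contain some background pixels or miss parts of the person; this helper simply trusts the mask as given.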

Usage

Run the MATLAB script demo.m provided with the dataset for a demonstration of how to load the images together with their annotated joints.
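For readers working in Python, the following is a hedged sketch of the kind of visualization shown in the example figures: a depth image rescaled to grayscale with the joints overlaid and occluded joints drawn in red. It does not read any dataset files and does not replace demo.m. The functions `depth_to_gray` and `draw_joints`, the green color for visible joints, and the convention that a joint is an `(x, y)` pair with 0-based column `x` and row `y` are all assumptions; if the annotations use MATLAB's 1-based indexing, subtract 1 from each coordinate first.

```python
from typing import List, Sequence, Tuple

RGB = Tuple[int, int, int]
RED: RGB = (255, 0, 0)    # occluded joints, matching the convention in the example figures
GREEN: RGB = (0, 255, 0)  # visible joints; this color is an arbitrary choice


def depth_to_gray(depth: Sequence[Sequence[float]]) -> List[List[RGB]]:
    """Linearly rescale depth values to 0..255 and return a grayscale RGB image."""
    flat = [v for row in depth for v in row]
    lo, hi = min(flat), max(flat)
    span = (hi - lo) or 1.0  # avoid division by zero on a constant image

    def to_gray(v: float) -> RGB:
        g = round(255 * (v - lo) / span)
        return (g, g, g)

    return [[to_gray(v) for v in row] for row in depth]


def draw_joints(image: List[List[RGB]], joints: Sequence[Tuple[float, float]],
                visible: Sequence[bool], radius: int = 1) -> List[List[RGB]]:
    """Paint a (2*radius+1)-pixel square at each joint: red if occluded, green if visible."""
    height, width = len(image), len(image[0])
    out = [row[:] for row in image]  # copy so the input image is left unchanged
    for (x, y), vis in zip(joints, visible):
        color = GREEN if vis else RED
        cx, cy = round(x), round(y)
        for yy in range(cy - radius, cy + radius + 1):
            for xx in range(cx - radius, cx + radius + 1):
                if 0 <= yy < height and 0 <= xx < width:  # clip at the image border
                    out[yy][xx] = color
    return out


if __name__ == "__main__":
    # Synthetic 6x8 depth image; the second joint is occluded.
    depth = [[1000.0 + 10 * (r + c) for c in range(8)] for r in range(6)]
    overlay = draw_joints(depth_to_gray(depth), joints=[(2, 2), (5, 3)], visible=[True, False])
    print(overlay[3][5])  # (255, 0, 0): centre of the occluded joint
```

The resulting nested list of RGB tuples can be handed to any image library for display; keeping the sketch dependency-free makes the drawing logic easy to check.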

Download

Paper

If you use this dataset for research purposes, please cite the following paper:

U. Rafi, J. Gall, and B. Leibe, "A Semantic Occlusion Model for Human Pose Estimation from a Single Depth Image," in CVPR 2015 ChaLearn Looking at People Workshop.

@InProceedings{RafiChalearn,
  title     = {{A Semantic Occlusion Model for Human Pose Estimation from a Single Depth Image}},
  author    = {U. Rafi and J. Gall and B. Leibe},
  booktitle = {{CVPR 2015 ChaLearn Looking at People Workshop}},
  year      = {2015}
}

If you have any questions regarding the dataset, please contact Umer Rafi.